Overview

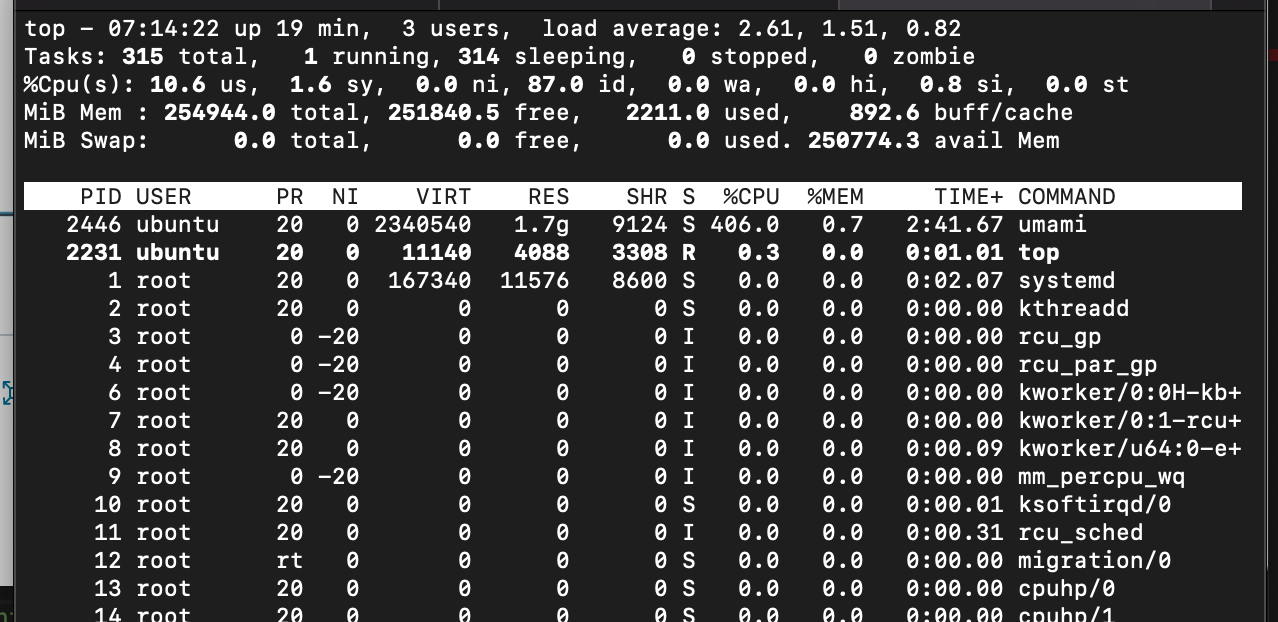

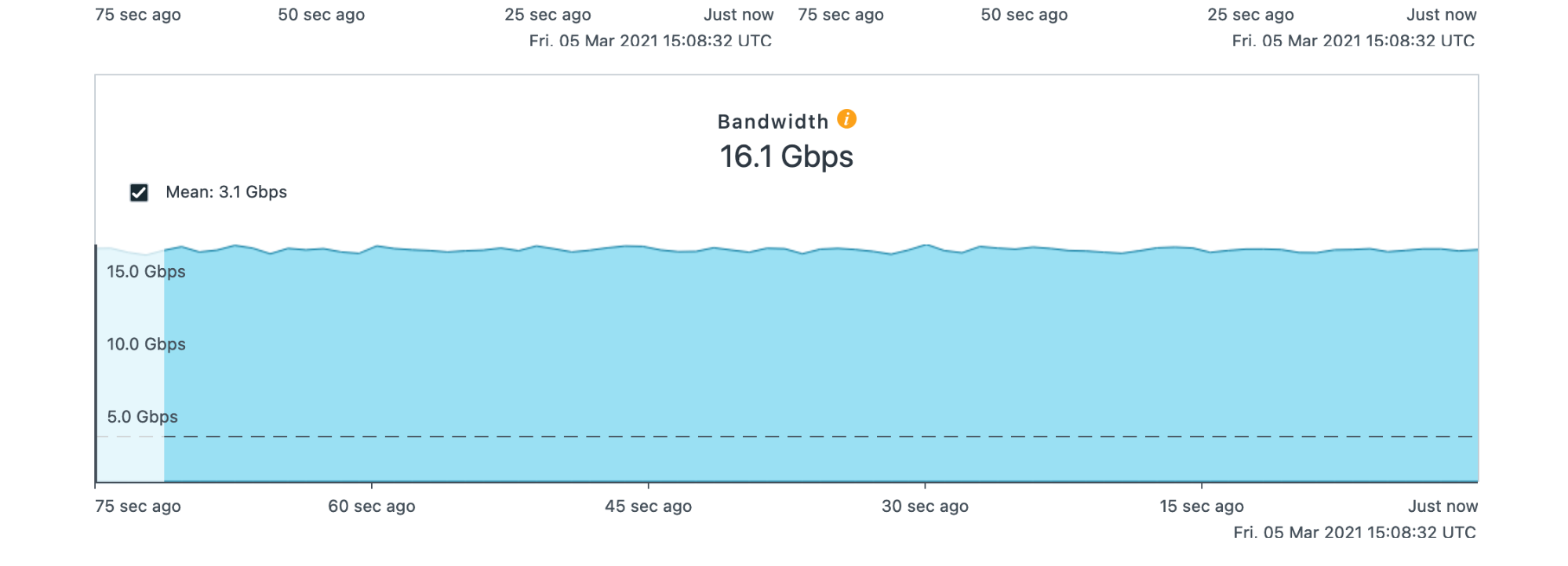

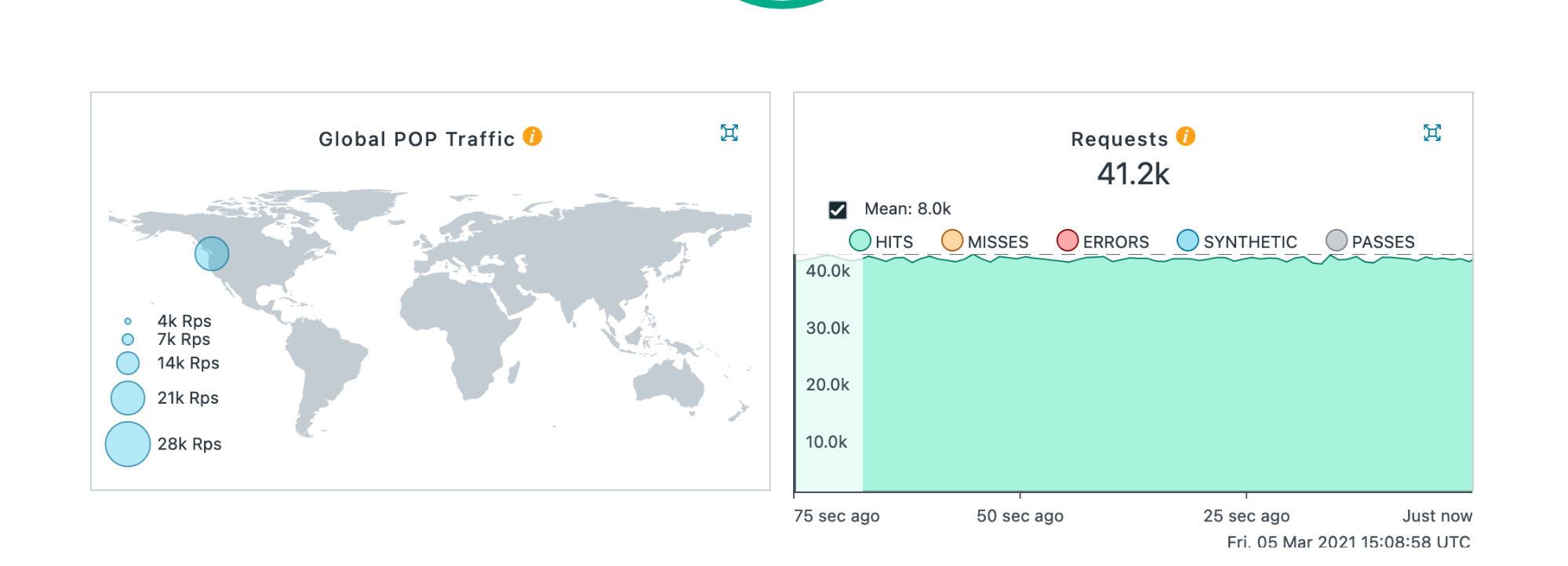

In experiments running Goose load tests from AWS, Goose has proven to make fantastic use of all available system resources, so that it is generally limited only by network speed. A smaller server instance was able to simulate 2,000 users generating over 6,500 requests per second and saturating a 2.6 Gbps uplink. As more uplink speed was added, Goose scaled linearly: by distributing the test across two servers with faster uplinks, it comfortably simulated 12,000 active users generating over 41,000 requests per second and saturating 16 Gbps.

Generating this much traffic is not, in and of itself, especially difficult; what sets Goose apart is that each request is fully analyzed and validated. Goose not only confirms the status code of every response the server returns, but also inspects the returned HTML to confirm it contains all expected elements. Links to static elements such as images and CSS are extracted from each response and loaded as well, so each simulated user behaves much as a real user would. Goose excels at providing consistent and repeatable load testing.

The Load Test

Server-side, the load test runs against the Umami demonstration profile included with Drupal 9. The Umami demo generates an attractive and realistic website simulating a food magazine, offering a practical example of what Drupal is capable of. The demo site is multilingual and has quite a bit of content, multiple taxonomies, and much of the rich functionality you'd expect from a real website, making it a good load test target. We enabled the Fastly content delivery network module and configured the site so that all pages and static assets are cached in the CDN.

The Goose codebase includes an example Umami load test, which was used to generate the numbers presented here. It simulates three different types of users: an anonymous user browsing the site in English, an anonymous user browsing the site in Spanish, and an administrative user who logs into the site. The two anonymous users visit every page on the site. For example, the anonymous user browsing in English loads the front page, browses all the articles and article listings, views all the recipes and recipe listings, accesses every node directly by node ID, performs searches using terms pulled from actual site content, and fills out the site's contact form. With each action, Goose validates the HTTP response code and inspects the returned HTML to confirm that it contains the expected elements.
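The per-page checks described above can be sketched in plain Rust. This is a minimal, hypothetical illustration rather than Goose's own validation API: `validate_page` and `extract_title` are names invented here, the sample HTML is made up, and the sketch assumes the status code and body have already been fetched. It confirms that the status is 200, that the `<title>` contains an expected string, and that other expected fragments appear in the page.

```rust
/// Returns the trimmed contents of the first <title>...</title> element, if any.
fn extract_title(html: &str) -> Option<&str> {
    let start = html.find("<title>")? + "<title>".len();
    let end = start + html[start..].find("</title>")?;
    Some(html[start..end].trim())
}

/// Hypothetical check run against each response: the status code must be 200,
/// the <title> must contain `expected_title`, and every string in
/// `expected_text` must appear somewhere in the HTML body.
fn validate_page(
    status: u16,
    body: &str,
    expected_title: &str,
    expected_text: &[&str],
) -> Result<(), String> {
    if status != 200 {
        return Err(format!("unexpected status code: {status}"));
    }
    let title = extract_title(body).ok_or_else(|| "no <title> element".to_string())?;
    if !title.contains(expected_title) {
        return Err(format!("title {title:?} does not contain {expected_title:?}"));
    }
    for &text in expected_text {
        if !body.contains(text) {
            return Err(format!("missing expected text: {text:?}"));
        }
    }
    Ok(())
}

fn main() {
    // Made-up sample response body.
    let html = "<html><head><title>Recipes | Umami</title></head>\
                <body><h1>Recipes</h1></body></html>";
    println!("{:?}", validate_page(200, html, "Recipes", &["<h1>Recipes</h1>"]));
    println!("{:?}", validate_page(404, html, "Recipes", &[]));
}
```

Here the first call prints `Ok(())` and the second prints `Err("unexpected status code: 404")`. Returning an error message rather than panicking lets a load test record the failure and keep generating traffic.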

For each page returned, the load test finds all local assets defined in the HTML, such as CSS, JavaScript, and images, and loads them all, much as a real user's web browser would. Rust allows us to efficiently extract elements from the page, as is required when posting and processing forms; when writing and running large load tests in other languages, we've been limited in how much processing could happen before the load generator became CPU-starved.
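Finding local assets in a page can be sketched with simple string scanning. This is a simplified, hypothetical helper (`local_assets` and the extension list are assumptions made here, not code from the Goose Umami example): it only looks at double-quoted `src="..."` and `href="..."` attributes, treats root-relative paths as local, and ignores query strings when checking the file extension.

```rust
/// File extensions treated as static assets in this sketch (an assumption;
/// extend the list for fonts, icons, and so on).
const ASSET_EXTENSIONS: [&str; 7] = [".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg"];

/// Collects root-relative static asset URLs referenced by double-quoted
/// `src="..."` or `href="..."` attributes, skipping duplicates.
fn local_assets(html: &str) -> Vec<String> {
    let mut assets: Vec<String> = Vec::new();
    for attr in ["src=\"", "href=\""] {
        let mut rest = html;
        while let Some(pos) = rest.find(attr) {
            rest = &rest[pos + attr.len()..];
            let Some(end) = rest.find('"') else { break };
            let url = &rest[..end];
            rest = &rest[end + 1..];
            // Local means root-relative ("/..."), not protocol-relative ("//host/...").
            let is_local = url.starts_with('/') && !url.starts_with("//");
            // Drop any query string before checking the file extension.
            let path = url.split('?').next().unwrap_or(url);
            let is_asset = ASSET_EXTENSIONS.iter().any(|ext| path.ends_with(*ext));
            if is_local && is_asset && !assets.iter().any(|a| a.as_str() == url) {
                assets.push(url.to_string());
            }
        }
    }
    assets
}

fn main() {
    // Made-up HTML fragment for illustration.
    let html = r#"<link rel="stylesheet" href="/core/misc/base.css?r0p4ey">
<img src="/sites/default/files/pasta.jpg"><a href="/en/recipes">Recipes</a>"#;
    for asset in local_assets(html) {
        // A real load test would now request each asset, as a browser would.
        println!("would also load: {asset}");
    }
}
```

A production load test would more likely use a regular expression or an HTML parser; plain scanning keeps this sketch dependency-free.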

During initial testing, the first bottleneck encountered was DNS lookups. This was solved by adding the hostname and IP address of the site being load tested to /etc/hosts. The second issue was the limit on open file descriptors, quickly solved by increasing ulimit -n for the operating system user running the load test. Beyond that, it was important to first run this load test at a lower rate for a while to sufficiently warm the Drupal application cache, letting the server generate every possible page and static element once so that subsequent requests were served directly from Fastly's CDN. A few activities, such as searching the site, would need additional server-side optimization; these were temporarily disabled during these tests to prevent a bottleneck at the web server.
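Raising `ulimit -n` only helps if the load test process actually inherits the new limit. Assuming the load generator runs on Linux, one way to confirm this from inside a Rust program is to read `/proc/self/limits`. The helper name `max_open_files` is hypothetical; the soft limit is the value `ulimit -n` reports by default.

```rust
use std::fs;

/// Parses the soft and hard "Max open files" limits out of the contents of
/// Linux's /proc/self/limits. Values may be numbers or "unlimited".
fn max_open_files(limits: &str) -> Option<(String, String)> {
    let line = limits.lines().find(|l| l.starts_with("Max open files"))?;
    let mut fields = line["Max open files".len()..].split_whitespace();
    let soft = fields.next()?.to_string();
    let hard = fields.next()?.to_string();
    Some((soft, hard))
}

fn main() {
    // Linux-only: other platforms do not provide /proc/self/limits.
    match fs::read_to_string("/proc/self/limits").ok().as_deref().and_then(max_open_files) {
        Some((soft, hard)) => println!("open file limit: soft={soft}, hard={hard}"),
        None => println!("could not read the open file limit on this platform"),
    }
}
```

Each simulated user holds open network connections, so checking this once at startup can surface a too-low limit before it shows up as failed requests mid-test.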

8 Cores And 2.5 Gbps

To begin, an 8-core Amazon c5a.2xlarge instance, powered by AMD EPYC 7002 processors, was selected. A variety of tests confirmed that Goose scales up nicely from 100 users to 1,000 users: a single Goose process is able to leverage all available CPU cores on the instance, and simulating 1,000 users generates 10x as much traffic as simulating 100 users, as expected.

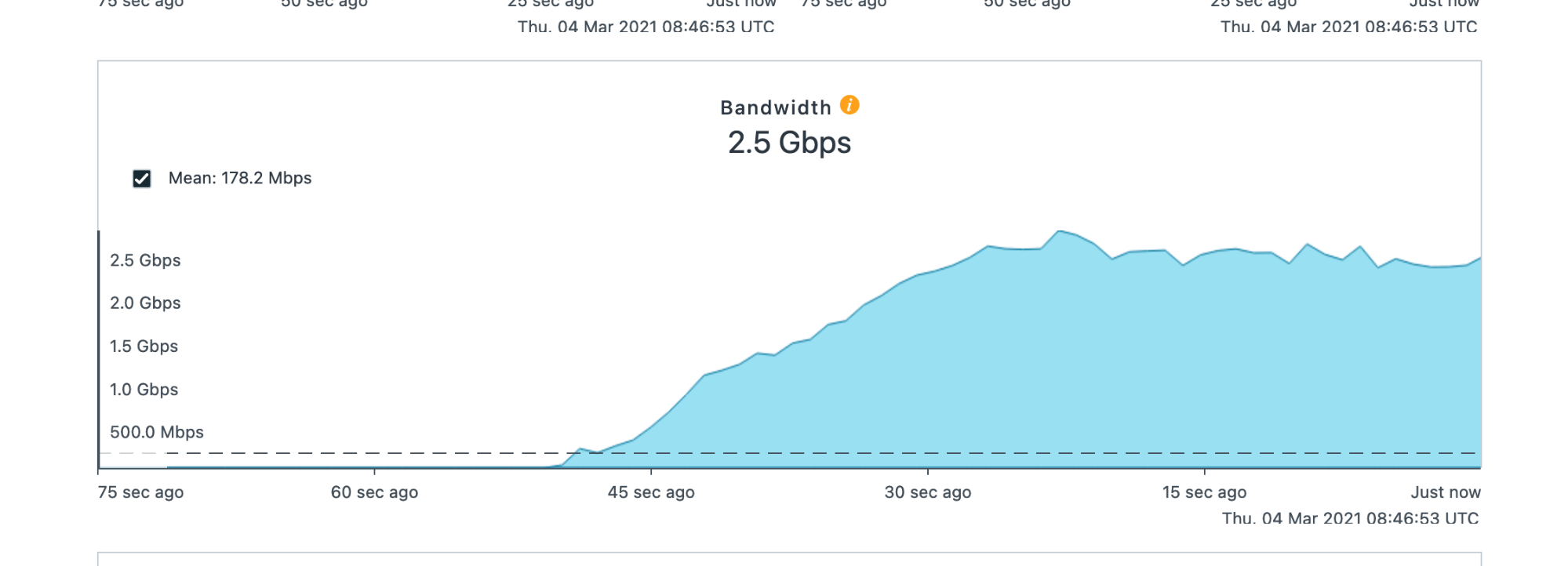

Peak performance for this server instance came at 2,000 simulated users, at which point the test was throttled by the network uplink speed. Simulating 2,000 users, Goose made over 6,600 requests per second and generated a sustained 2.5 Gbps of traffic on Fastly.

Although Goose could simulate more simultaneous users on this server instance (from 2,000 up to 3,000 to 4,000 or more), the uplink prevented the test from generating more than 2.5 Gbps of sustained traffic. Interestingly, the generated traffic did become more consistent, resulting in a smoother bandwidth graph, thanks to Goose leveraging Rust's asynchronous capabilities. In other words, with more users simulated, there was always another user ready to make a request while earlier requests were waiting for a server response.

22,900 Requests Per Second From A Single Server

Once the uplink was identified as the constraint limiting load test performance, it was necessary to spin up a larger server instance with a faster uplink. The next server chosen was Amazon's 32-core r5n.8xlarge, as it offers higher network performance.

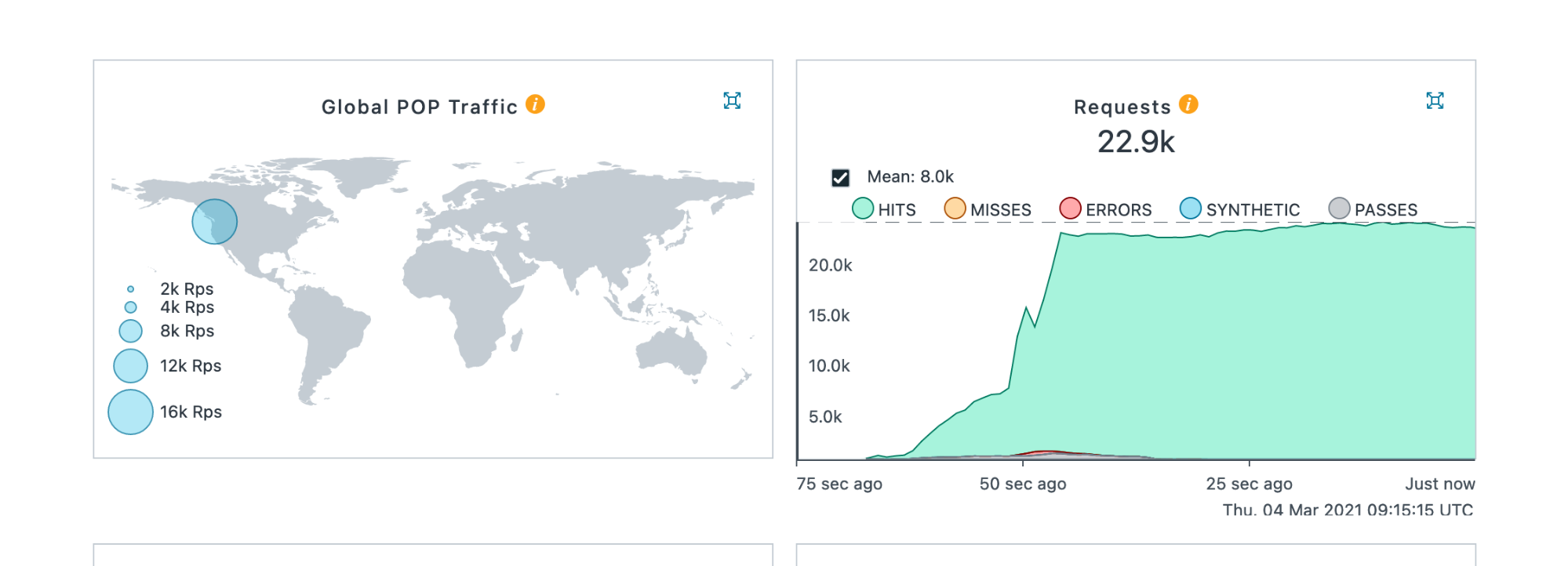

By repeatedly increasing the number of simultaneous users simulated, we found that this faster uplink allowed Goose to comfortably simulate 6,000 users. Collectively, the simulated users made around 20,000 requests per second, and Fastly registered a consistent 8 Gbps of traffic. As always, Goose validated every request, confirming that the returned HTML contained the expected titles and text, and it extracted and loaded all static elements.
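A quick back-of-the-envelope check using only the figures reported in this post suggests why bandwidth tracks the number of simulated users so closely. The sketch below assumes the Fastly bandwidth figure consists of responses to the requests counted and ignores protocol overhead, so the results are rough. On both instances it works out to roughly 47,000 to 50,000 bytes per request and about 3.3 requests per second per user, so adding users adds traffic proportionally until the uplink is full.

```rust
/// Average bytes per request implied by a bandwidth figure (in gigabits per
/// second) and a request rate. Rough: assumes the bandwidth consists of the
/// responses to those requests, ignoring protocol overhead.
fn avg_bytes_per_request(gbps: f64, requests_per_sec: f64) -> f64 {
    gbps * 1e9 / 8.0 / requests_per_sec
}

/// Average request rate generated by each simulated user.
fn requests_per_user(requests_per_sec: f64, users: f64) -> f64 {
    requests_per_sec / users
}

fn main() {
    // (label, simulated users, requests per second, Gbps) as reported above.
    let runs = [
        ("8-core c5a.2xlarge", 2_000.0, 6_600.0, 2.5),
        ("32-core r5n.8xlarge", 6_000.0, 20_000.0, 8.0),
    ];
    for (label, users, rps, gbps) in runs {
        println!(
            "{label}: ~{:.0} bytes/request, ~{:.2} requests/s per user",
            avg_bytes_per_request(gbps, rps),
            requests_per_user(rps, users)
        );
    }
}
```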

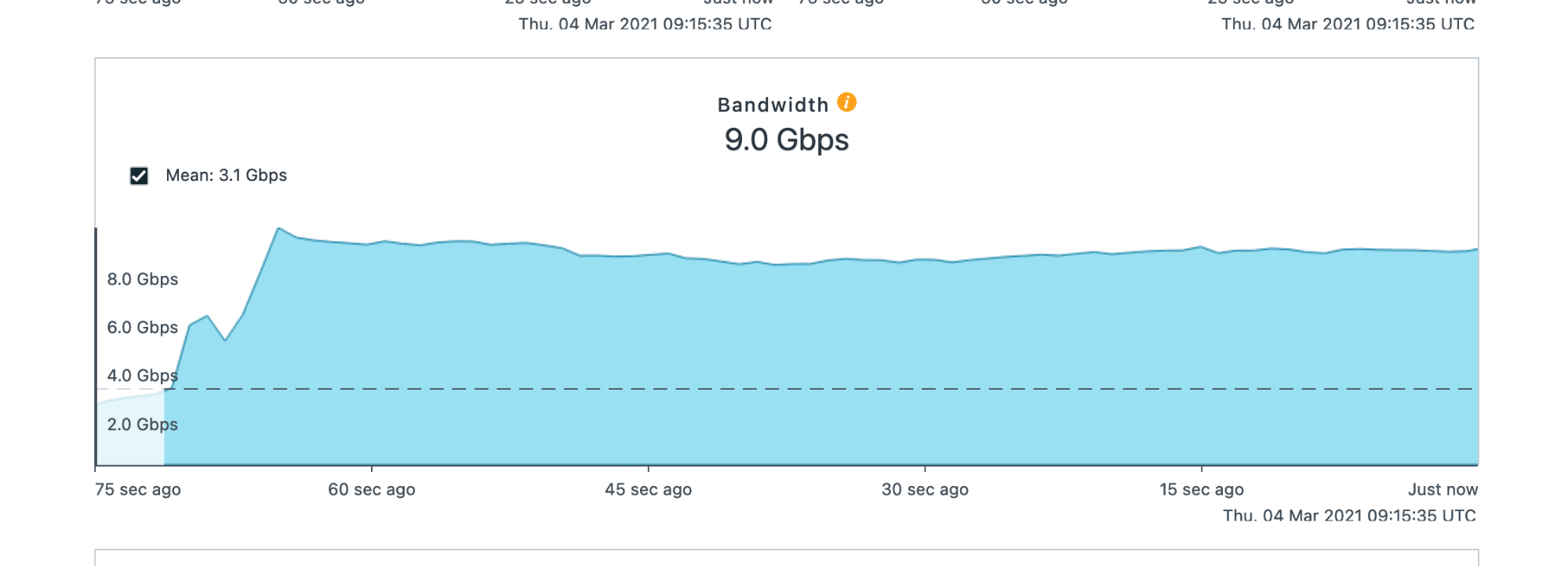

After the number of simulated users was doubled again, the server's uplink maxed out at 9 Gbps. As before, the CPU had no trouble managing this many users, and although the test was network-constrained, the bandwidth graph smoothed out.

Fastly registered the Goose load test generating (and validating) 22,900 requests per second.

Scaling Back On A Single Server

While it was clear that the test was again throttled by the uplink, a question remained as to how much CPU was actually required to perform a test at this massive scale. By progressively reducing the number of cores available to Goose, it was determined that 22 of the 32 available cores are required for the test to remain network-throttled rather than CPU-throttled. The number of cores available to Goose was reduced by modifying how the Tokio runtime is built in src/lib.rs, for example:

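```diff
-    let rt = Runtime::new().unwrap();
+    // Build a multi-threaded Tokio runtime (`runtime` is `tokio::runtime`)
+    // limited to 22 worker threads instead of one per available core.
+    let rt = runtime::Builder::new_multi_thread()
+        .worker_threads(22)
+        .enable_time()
+        .enable_io()
+        .build()
+        .unwrap();
```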
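The diff above hard-codes 22 worker threads. When repeating this kind of experiment, it can be convenient to pick the count at startup instead of recompiling. The following is a minimal sketch under stated assumptions: `worker_threads` and the `WORKER_THREADS` environment variable are hypothetical and not part of Goose. It caps the requested value at the number of cores the operating system reports via `std::thread::available_parallelism`.

```rust
use std::num::NonZeroUsize;
use std::thread;

/// Hypothetical helper: choose how many Tokio worker threads to request,
/// clamping an optional user-supplied value to between 1 and the available cores.
fn worker_threads(requested: Option<usize>, available: usize) -> usize {
    let available = available.max(1);
    match requested {
        Some(n) => n.clamp(1, available),
        None => available,
    }
}

fn main() {
    // Cores the OS reports as usable by this process (falls back to 1).
    let available = thread::available_parallelism().map(NonZeroUsize::get).unwrap_or(1);
    // Hypothetical environment variable; Goose itself does not read it.
    let requested: Option<usize> = std::env::var("WORKER_THREADS").ok().and_then(|v| v.parse().ok());
    let threads = worker_threads(requested, available);
    println!("using {threads} of {available} available cores");
    // This value would then be passed to
    // tokio::runtime::Builder::new_multi_thread().worker_threads(threads).
}
```

Defaulting to every available core mirrors what `Runtime::new()` does, so leaving the variable unset keeps the original behavior.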

With the Tokio runtime further reduced to only 2 cores, the test sustained around 1.2 Gbps of traffic, making (and validating) 3,000 requests per second. Doubling this to 4 cores, Goose generated roughly the same 6,000 requests per second achieved in the first test on the smaller 8-core server.

Distributed Load

As a final test, Goose was run as a Gaggle, in which a Manager process controls two Workers that together generate cumulative load. In this configuration, Goose continued to scale linearly. On a single server, Goose simulated 6,000 users and generated a sustained 8 Gbps of traffic. With two servers, Goose was able to double those numbers: it simulated 12,000 users and generated a sustained 16.1 Gbps. As expected, no performance was lost to the overhead of managing a distributed load test, because each Worker is fully responsible for its portion of the generated load and the Manager simply collects statistics.

During this distributed load test, the Gaggle was making and validating an impressive 41,200 requests per second.
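Why the Manager adds so little overhead can be illustrated with a minimal sketch. This is not Goose's actual Gaggle implementation: `WorkerMetrics`, `metrics`, and `aggregate` are hypothetical names, the numbers are made up, and real Workers send richer metrics over the network. Merging what each Worker reports amounts to adding counters together, which is cheap compared with generating and validating the load itself.

```rust
use std::collections::HashMap;

/// Hypothetical, much-simplified metrics a Worker might report to the Manager.
#[derive(Debug, Default, Clone, PartialEq)]
struct WorkerMetrics {
    requests: u64,
    failures: u64,
    /// Request counts keyed by a request name such as "GET /".
    per_request: HashMap<String, u64>,
}

/// Builds a WorkerMetrics value from (request name, count) pairs.
fn metrics(failures: u64, counts: &[(&str, u64)]) -> WorkerMetrics {
    let per_request: HashMap<String, u64> =
        counts.iter().map(|&(name, n)| (name.to_string(), n)).collect();
    let requests: u64 = per_request.values().sum();
    WorkerMetrics { requests, failures, per_request }
}

/// Merges every Worker's metrics into a single aggregate by adding counters.
fn aggregate(workers: &[WorkerMetrics]) -> WorkerMetrics {
    let mut total = WorkerMetrics::default();
    for worker in workers {
        total.requests += worker.requests;
        total.failures += worker.failures;
        for (name, count) in &worker.per_request {
            *total.per_request.entry(name.clone()).or_insert(0) += count;
        }
    }
    total
}

fn main() {
    // Made-up numbers for two Workers.
    let worker_1 = metrics(0, &[("GET /", 60), ("GET /en/recipes", 40)]);
    let worker_2 = metrics(1, &[("GET /", 70), ("GET /en/articles", 50)]);
    let total = aggregate(&[worker_1, worker_2]);
    println!("{} requests, {} failures", total.requests, total.failures);
    println!("GET / requested {} times", total.per_request["GET /"]);
}
```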

Summary

Goose's performance is consistently impressive. It scales linearly as you add hardware, and is generally constrained only by network speed. Goose continues to scale linearly as you distribute the load across multiple servers and run in Gaggle mode.

The choice of Rust means your load test can comfortably perform complex logic at scale. Not only can Goose generate huge amounts of load, but each request can be properly analyzed and verified. Even at great scale, your load test can leverage regular expressions or more complex helper libraries that walk the DOM to confirm that your website and infrastructure are returning valid responses.

Reach out if you'd like help leveraging Goose to ensure that your next major traffic event is a success!

See our other Goose content to learn more about this highly scalable load testing software.

Photo by Mabel Amber from Pixabay